affected by long titles... or I'm in real trouble ;)

So I was working today on something that involved testing iSCSI functionality with Windows Server 2008.

While I was waiting for the VM to come up, I set about testing the iSCSI initiator within Windows 7.

What interested me most was a feature called "MCS", which stands for Multiple Connections per Session. It is defined in RFC 3720, so it is a protocol-level feature: a single iSCSI session can run over several TCP connections. That gives us the kind of path redundancy we have previously seen with MPIO.
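MCS has to be negotiated when the session logs in. In RFC 3720 the number of connections a session may use is governed by the `MaxConnections` login key (a value from 1 to 65535, default 1), and the negotiated result is the minimum of what the initiator and the target offer. The sketch below models only that rule. The helper names are hypothetical, and it simplifies real login negotiation by treating a key that is never sent as its default. It is not how the Windows initiator is implemented.

```python
# Minimal sketch of RFC 3720 MaxConnections negotiation (hypothetical helpers).
# Models only the "Minimum" result function, not a full iSCSI login.

DEFAULT_MAX_CONNECTIONS = 1  # RFC 3720 default; assumed here if the key is never sent
MIN_VALUE, MAX_VALUE = 1, 65535


def parse_login_text(text: str) -> dict[str, str]:
    """Parse NUL-separated 'key=value' pairs, as carried in iSCSI login text."""
    pairs = {}
    for item in text.split("\0"):
        if item:
            key, _, value = item.partition("=")
            pairs[key] = value
    return pairs


def offered_max_connections(text: str) -> int:
    """Return the MaxConnections value one side offers, validating its range."""
    value = int(parse_login_text(text).get("MaxConnections", DEFAULT_MAX_CONNECTIONS))
    if not MIN_VALUE <= value <= MAX_VALUE:
        raise ValueError(f"MaxConnections out of range: {value}")
    return value


def negotiate_max_connections(initiator_text: str, target_text: str) -> int:
    """The result function for MaxConnections is the minimum of both offers."""
    return min(offered_max_connections(initiator_text),
               offered_max_connections(target_text))


if __name__ == "__main__":
    initiator = "MaxConnections=4\0InitialR2T=Yes\0"
    target = "MaxConnections=2\0"
    # The session may use up to 2 connections.
    print(negotiate_max_connections(initiator, target))
```

If either side stays at 1, the session cannot use MCS at all, which is why a target has to support it too.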

Here is how to get there:

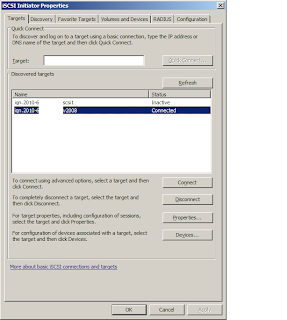

Load the iSCSI software from Control Panel->Administrative Tools->iSCSI Initiator:

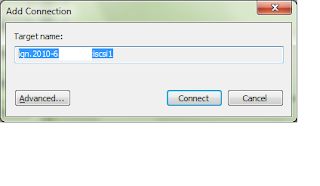

Pic1:

Select the target from the list and click "Properties".

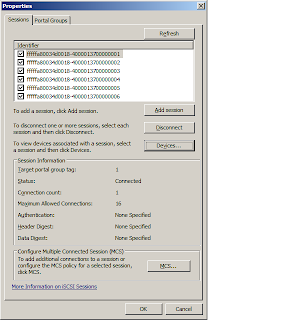

Pic2:

Select the MCS policy you want. I selected "Fail Over Only", which is the same as "Fixed" in the MPIO world.
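To make "Fail Over Only" concrete: one connection carries all the I/O while the others sit idle as standbys, and I/O only moves to a standby when the active path fails. The toy model below assumes hypothetical `Path` objects and portal addresses; it illustrates the policy and is not the Windows initiator's code.

```python
from dataclasses import dataclass


@dataclass
class Path:
    portal: str           # hypothetical "IP:port" of a target portal
    healthy: bool = True


class FailOverOnly:
    """Toy model: always use the first healthy path in priority order."""

    def __init__(self, paths: list[Path]):
        if not paths:
            raise ValueError("need at least one path")
        self.paths = paths  # index 0 is the preferred (active) path

    def select(self) -> Path:
        for path in self.paths:  # active path first, then the standbys
            if path.healthy:
                return path
        raise RuntimeError("all paths have failed")


if __name__ == "__main__":
    primary, standby = Path("10.0.0.1:3260"), Path("10.0.1.1:3260")
    policy = FailOverOnly([primary, standby])
    print(policy.select().portal)  # the primary carries the I/O
    primary.healthy = False
    print(policy.select().portal)  # the standby takes over
```

Because this model always picks the first healthy path, it fails back automatically once the preferred path recovers. Real implementations may handle failback differently.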

Pic3:

You will probably have only one connection in the session at the moment, so click "Add".

Don't click "Connect"!

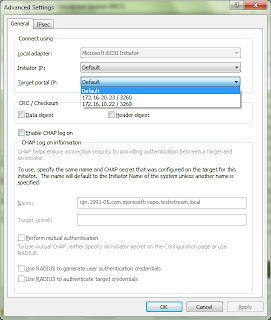

Pic4:

Click "Advanced"

Here is where you pick the other iSCSI target portal.
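Under the hood, "Add" puts another TCP connection into the existing session. RFC 3720 identifies each connection in a session by a connection ID (CID), and all connections of one session must go to portals in the same target portal group (identified by its Target Portal Group Tag, TPGT). The sketch below models that rule. The class names, IQN and addresses are hypothetical, and the CID assignment is simplified.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Portal:
    address: str  # hypothetical "IP:port"
    tpgt: int     # Target Portal Group Tag of this portal


@dataclass
class Session:
    target_name: str
    tpgt: int                 # portal group this session is bound to
    max_connections: int = 1  # value negotiated via MaxConnections
    connections: dict[int, Portal] = field(default_factory=dict)  # CID -> portal

    def add_connection(self, portal: Portal) -> int:
        """Add a connection (MCS) and return its simplified connection ID."""
        if portal.tpgt != self.tpgt:
            # A portal in another group would need a separate session.
            raise ValueError("portal belongs to a different portal group")
        if len(self.connections) >= self.max_connections:
            raise ValueError("MaxConnections limit reached")
        cid = max(self.connections, default=0) + 1
        self.connections[cid] = portal
        return cid


if __name__ == "__main__":
    session = Session("iqn.2008-01.com.example:storage", tpgt=1, max_connections=2)
    print(session.add_connection(Portal("10.0.0.1:3260", tpgt=1)))  # first connection
    print(session.add_connection(Portal("10.0.1.1:3260", tpgt=1)))  # second portal, same group
```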

Pic5:

And that's great! We now have a redundant path to our iSCSI targets... but notice this button:

Pic6:

Hmm, MPIO is not available in Windows 7. That's fine, as MCS gets us to pretty much the same place (in fact, some say MCS is better). However, Windows Server 2008 gives us the option of MPIO, so let's give it a go!

The first thing to remember is that MPIO is implemented at the driver level. If you have an EMC, 3PAR, NetApp, Dell, etc. device, the vendor has its own MPIO driver for Windows Server 2008, so follow their instructions (and look for the DSM, or Device Specific Module, instructions). Here we are using the Windows Server 2008 software iSCSI initiator and the native Windows Server 2008 MPIO driver.
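Where MCS adds connections inside one session, MPIO works with separate sessions. The same LUN shows up once per path, and the multipath driver has to recognise those copies as one disk before a DSM can choose a path for each I/O. The sketch below shows only that grouping step. The session names and LUN identifiers are invented. In practice the identifier comes from the device's SCSI identification data, which this sketch does not model.

```python
from collections import defaultdict


def group_paths_by_lun(discovered: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Group (session, lun_id) pairs so each unique LUN maps to all of its paths."""
    disks: dict[str, list[str]] = defaultdict(list)
    for session, lun_id in discovered:
        disks[lun_id].append(session)  # same identifier => same physical LUN
    return dict(disks)


if __name__ == "__main__":
    discovered = [
        ("session-A", "lun-0001"),  # hypothetical: LUN seen via the first path
        ("session-B", "lun-0001"),  # the same LUN seen via a second session
        ("session-A", "lun-0002"),  # a LUN with only one path
    ]
    for lun_id, paths in group_paths_by_lun(discovered).items():
        print(lun_id, paths)
```

Without this grouping, Windows would show each path as a separate disk. That is why the native MPIO driver (or a vendor DSM) has to be installed before multiple sessions to the same target are safe to use.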

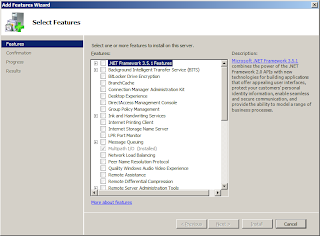

When you install or start iSCSI on Windows Server 2008, it asks you to install MPIO. If you said no, or just forgot, install MPIO like this:

From the "Add Features Wizard":

Pic1:

Once it is installed, open MPIO from Control Panel, click "Add support for iSCSI devices" and then reboot. (P.S. This is also where you would add a third-party DSM driver.)

Pic2:

Go back to the iSCSI Initiator (in Administrative Tools).

Pic3:

Select the target and click "Properties".

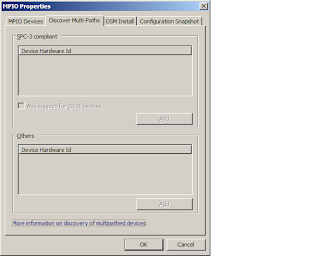

Pic4:

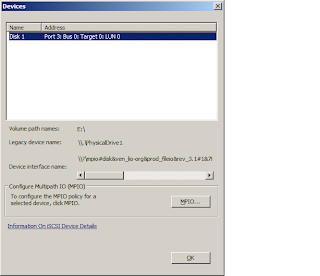

Highlight the session and click "Devices...".

Pic5:

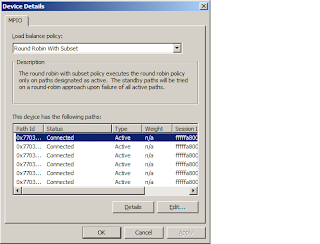

Click "MPIO" and select the policy you want.
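Besides Fail Over Only, Microsoft's DSM offers load-balance policies including Round Robin and Least Queue Depth. The sketch below is a simplified model of how those two might pick a path for each I/O. The path names are hypothetical, and the real DSM also tracks path state and other details not modelled here.

```python
import itertools


class RoundRobin:
    """Rotate I/O across all paths in turn."""

    def __init__(self, paths: list[str]):
        if not paths:
            raise ValueError("need at least one path")
        self._cycle = itertools.cycle(paths)

    def select(self) -> str:
        return next(self._cycle)


class LeastQueueDepth:
    """Send each I/O down the path with the fewest outstanding requests."""

    def __init__(self, paths: list[str]):
        if not paths:
            raise ValueError("need at least one path")
        self.outstanding = {path: 0 for path in paths}

    def select(self) -> str:
        # On a tie, min() returns the first path in insertion order.
        path = min(self.outstanding, key=self.outstanding.__getitem__)
        self.outstanding[path] += 1  # an I/O has been issued on this path
        return path

    def complete(self, path: str) -> None:
        self.outstanding[path] -= 1  # that I/O has finished


if __name__ == "__main__":
    rr = RoundRobin(["path-A", "path-B"])
    print([rr.select() for _ in range(4)])  # alternates A, B, A, B

    lqd = LeastQueueDepth(["path-A", "path-B"])
    first, second = lqd.select(), lqd.select()  # one I/O on each path
    lqd.complete(first)                         # path-A drains first
    print(lqd.select())                         # path-A again: it is now idle
```

Round Robin spreads load evenly when paths are equal. Least Queue Depth adapts when one path is slower, at the cost of tracking outstanding I/O per path.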

Pic6:

Hope that helps someone out there!

Sources:

http://www.ietf.org/rfc/rfc3720.txt

http://www.windowsitpro.com/article/virtualization2/Q-With-iSCSI-what-s-the-difference-between-Multipath-I-O-MPIO-and-multiple-connections-per-session-MCS-.aspx